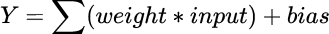

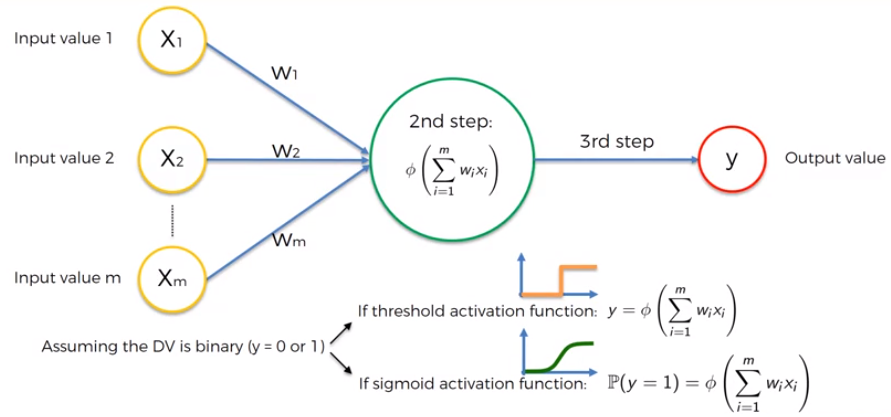

What an artificial neuron does is calculate a weighted sum of its inputs, add a bias, and then decide whether it should be “fired” or not.
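As a minimal sketch of the weighted sum plus bias described above, the function below computes the value a neuron produces before any activation is applied. The name `neuron_output` and the example numbers are illustrative assumptions, not taken from the figures.

```python
def neuron_output(inputs, weights, bias):
    """Return the weighted sum of the inputs plus a bias (the pre-activation value)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Illustrative values only: three inputs, three weights, one bias.
y = neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], bias=0.5)
print(y)  # 0.5 - 2.0 + 6.0 + 0.5 = 5.0
```

The result is unbounded, which is why an activation function is needed to decide whether the neuron fires.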

Considering the neuron in the figure above, the value of Y can be anything from -inf to +inf. The neuron itself doesn’t know the bounds of the value. How do we decide whether the neuron should fire or not? We use activation functions for this purpose: they check the Y value produced by a neuron and decide whether outside connections should consider this neuron as fired or not.

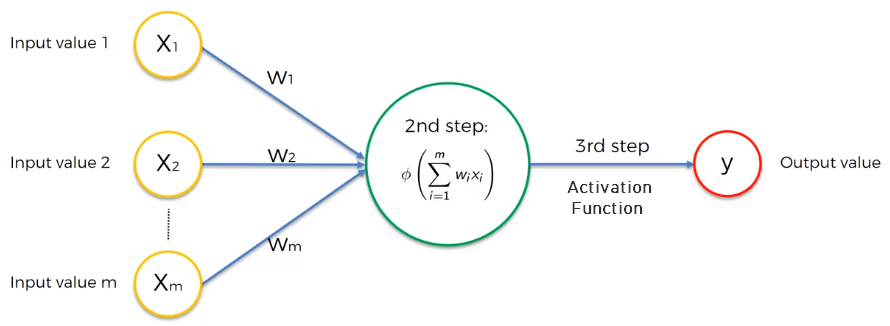

Generally, we have four different types of activation function that we can choose from. Of course, there are more types, but these four are the predominant ones. The Threshold Function is the simplest: if the value is less than zero the function is zero, and if the value is more than zero the function is one. It is basically a yes/no type of function.
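The threshold function can be sketched in a couple of lines. The text does not say what happens at exactly zero, so the code below assumes that an input of zero counts as fired; other conventions exist.

```python
def threshold(y):
    """Step activation: 0 for negative input, 1 otherwise."""
    # Assumption: an input of exactly zero is treated as "fired" (returns 1).
    return 1 if y >= 0 else 0


for value in (-2.0, -0.1, 0.3, 4.0):
    print(value, "->", threshold(value))
```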

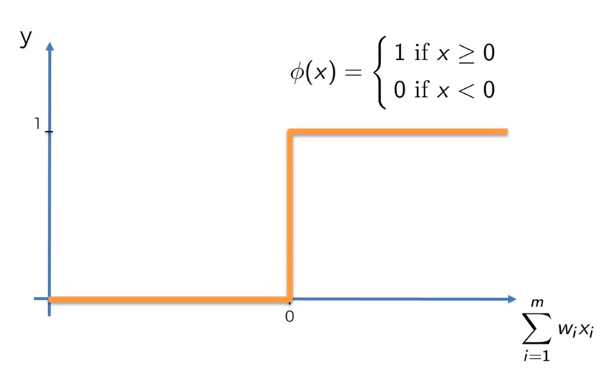

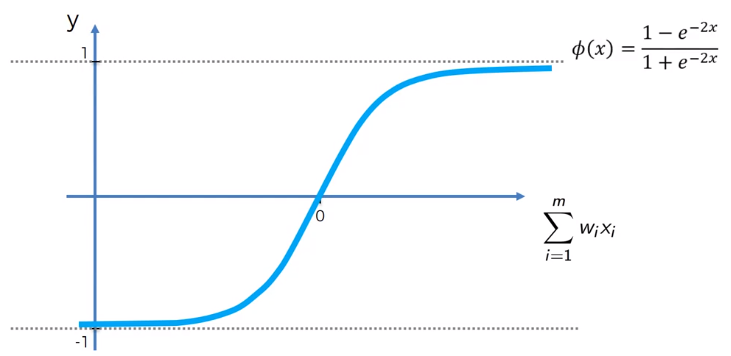

In the Sigmoid Function, the value x in the formula is the weighted sum. It is the function used in logistic regression. It has a gradual progression: for inputs well below zero it approaches zero, and for inputs well above zero it approaches one. It is especially useful in the output layer, particularly when we are trying to predict probabilities. The logistic sigmoid function can cause a neural network to get stuck during training, because its slope becomes very small for inputs far from zero. The Softmax Function is a more generalized logistic activation function that is used for multiclass classification. The sigmoid function is mostly used in classification problems, since we need to scale the output into a specific range with a threshold.
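The following is a small sketch of the sigmoid and softmax functions using only the standard library. The helper names and sample scores are illustrative assumptions. The derivative helper is included to show how small the slope gets for large inputs, which is one common explanation for training getting stuck.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, written so that large |x| does not overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_slope(x):
    """Derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


def softmax(scores):
    """Turn a list of scores into probabilities that sum to one."""
    largest = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0))                           # 0.5, the midpoint
print(sigmoid_slope(0.0), sigmoid_slope(10.0))  # slope shrinks far from zero
print(softmax([1.0, 2.0, 3.0]))               # one probability per class
```

With two classes, softmax over the scores `[x, 0]` gives the same probability for the first class as `sigmoid(x)`, which is the sense in which softmax generalizes the logistic function.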

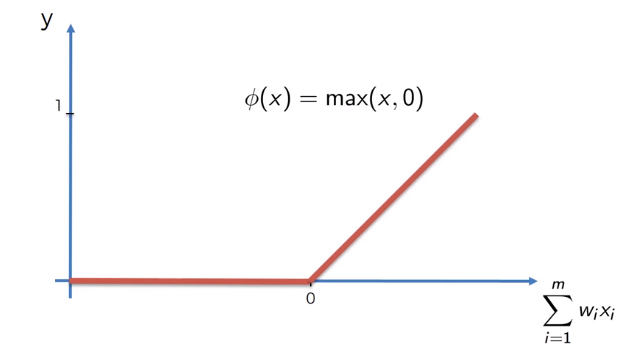

The Rectifier Function is one of the most popular functions for artificial neural networks. For inputs below zero it is zero, and from there it gradually progresses as the input value increases. It is used in almost all convolutional neural networks and deep learning models.
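A minimal sketch of the rectifier function, often called ReLU, is shown below. The function name is an assumption for illustration.

```python
def relu(x):
    """Rectifier: zero for negative input, the input itself otherwise."""
    return max(0.0, x)


for value in (-3.0, -0.5, 0.0, 2.5):
    print(value, "->", relu(value))
```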

The Hyperbolic Tangent (tanh) Function is very similar to the sigmoid function, but here the function goes below zero, ranging from -1 to 1, and that can be useful in some applications. The advantage is that negative inputs are mapped strongly negative and inputs near zero are mapped near zero in the tanh graph. The tanh function is mainly used for classification between two classes.
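To make the similarity to the sigmoid concrete, the sketch below compares the two on a few sample inputs and checks the identity tanh(x) = 2 * sigmoid(2x) - 1. The sample inputs are arbitrary, and `sigmoid` is redefined here so the block runs on its own.

```python
import math


def sigmoid(x):
    """Logistic sigmoid (simple form, fine for moderate inputs)."""
    return 1.0 / (1.0 + math.exp(-x))


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    t = math.tanh(x)
    rescaled = 2.0 * sigmoid(2.0 * x) - 1.0  # a shifted and stretched sigmoid
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={t:+.4f}  match={math.isclose(t, rescaled, abs_tol=1e-12)}")
```

The sigmoid column stays between 0 and 1, while the tanh column is centered on zero and takes negative values for negative inputs.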

Now, suppose for example that we want a 0/1 output. We can use either the threshold function or the sigmoid function as the activation function. The advantage of the sigmoid function is that it gives us the probability of the output being yes or no.

The sigmoid activation function tells us the probability that the output equals one. A common setup is to apply the rectifier activation function in the hidden layer and then pass the signals on to the output layer, where the sigmoid activation function is used. That is the final output, which predicts the probability.
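The arrangement described above, a rectifier hidden layer feeding a sigmoid output, can be sketched as a tiny forward pass. All weights, biases, layer sizes, and function names below are made-up values for illustration; a real network would learn its weights during training.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum plus bias, then the activation."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs):
    # Hypothetical parameters: 2 inputs -> 2 hidden neurons -> 1 output neuron.
    hidden_weights = [[0.5, -0.2], [0.3, 0.8]]
    hidden_biases = [0.1, -0.1]
    output_weights = [[1.0, -1.5]]
    output_biases = [0.2]

    hidden = dense(inputs, hidden_weights, hidden_biases, relu)
    return dense(hidden, output_weights, output_biases, sigmoid)[0]


probability = forward([1.0, 2.0])
prediction = 1 if probability >= 0.5 else 0  # cut-off of 0.5 is an assumption
print(probability, prediction)
```

The output of `forward` is always between 0 and 1, so it can be read as the probability that the answer is one, and a cut-off turns it into a yes/no decision.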